AI Inputs & Feedback in a Desktop Design App

Info

- Desktop App

- Role: UX Researcher, Product Designer

- 14 Weeks

- May - August 2023

- Solo Project

Tools

- Figma

- RunwayML

Overview

FontFacing is a desktop app concept for AI-driven typography design. The AI analyzes your inputs and builds a training model as you work. When you change the path of one letterform, FontFacing analyzes the change and, where necessary, reproduces it across the rest of the typeface. FontFacing represents the potential of AI to vastly streamline repetitive tasks without taking away human agency or control.

After I completed the project, OpenAI added two noteworthy features to ChatGPT that validate, in part, the insights and direction of this project. For more details, see below.

Problem

- Designing all of the glyphs of a typeface is a laborious and repetitive process that can take several weeks or even months to complete.

- Ensuring consistency across letterforms is time-intensive, and during development there are limited opportunities to experiment, iterate, and refine.

- Current integration of AI features into existing design tools is limited, at best, and intelligent refinement has to be done manually.

Goals

- Reduce the manual workload of typeface design by utilizing the strengths of AI and machine learning's pattern recognition.

- Utilize AI to maintain stylistic rules across characters to reduce repetitive adjustments.

- Provide designers clear modes and controls so they can influence or override AI decisions whenever necessary.

Solution

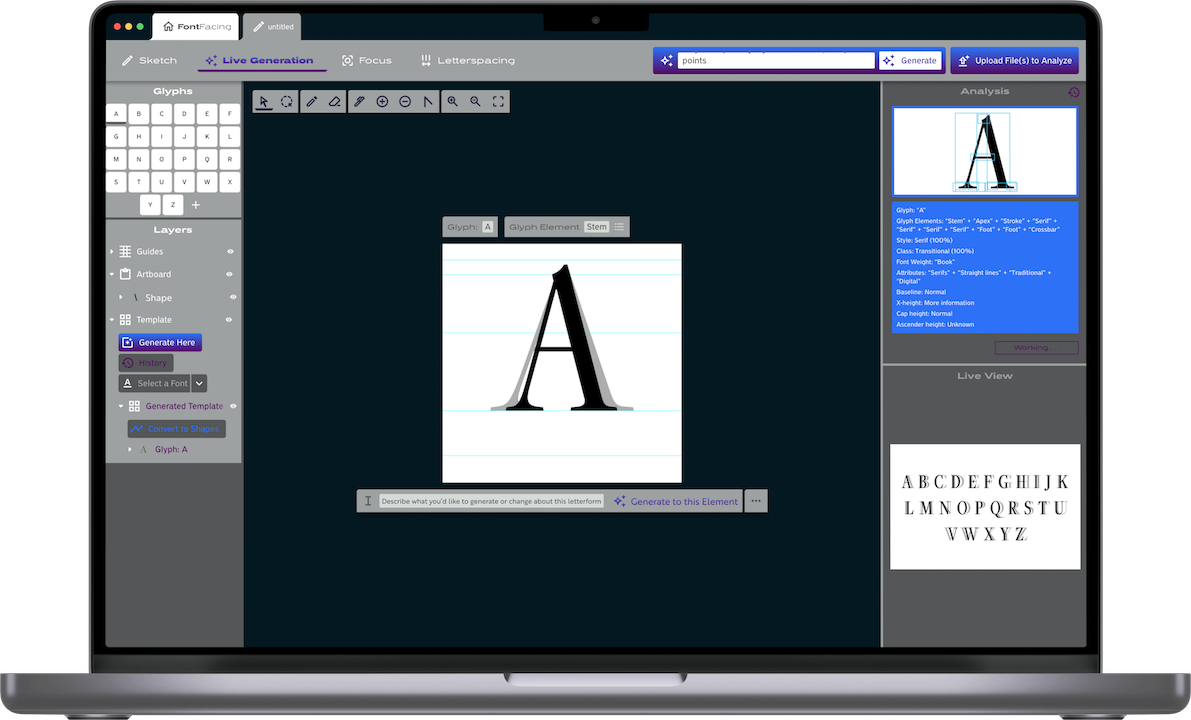

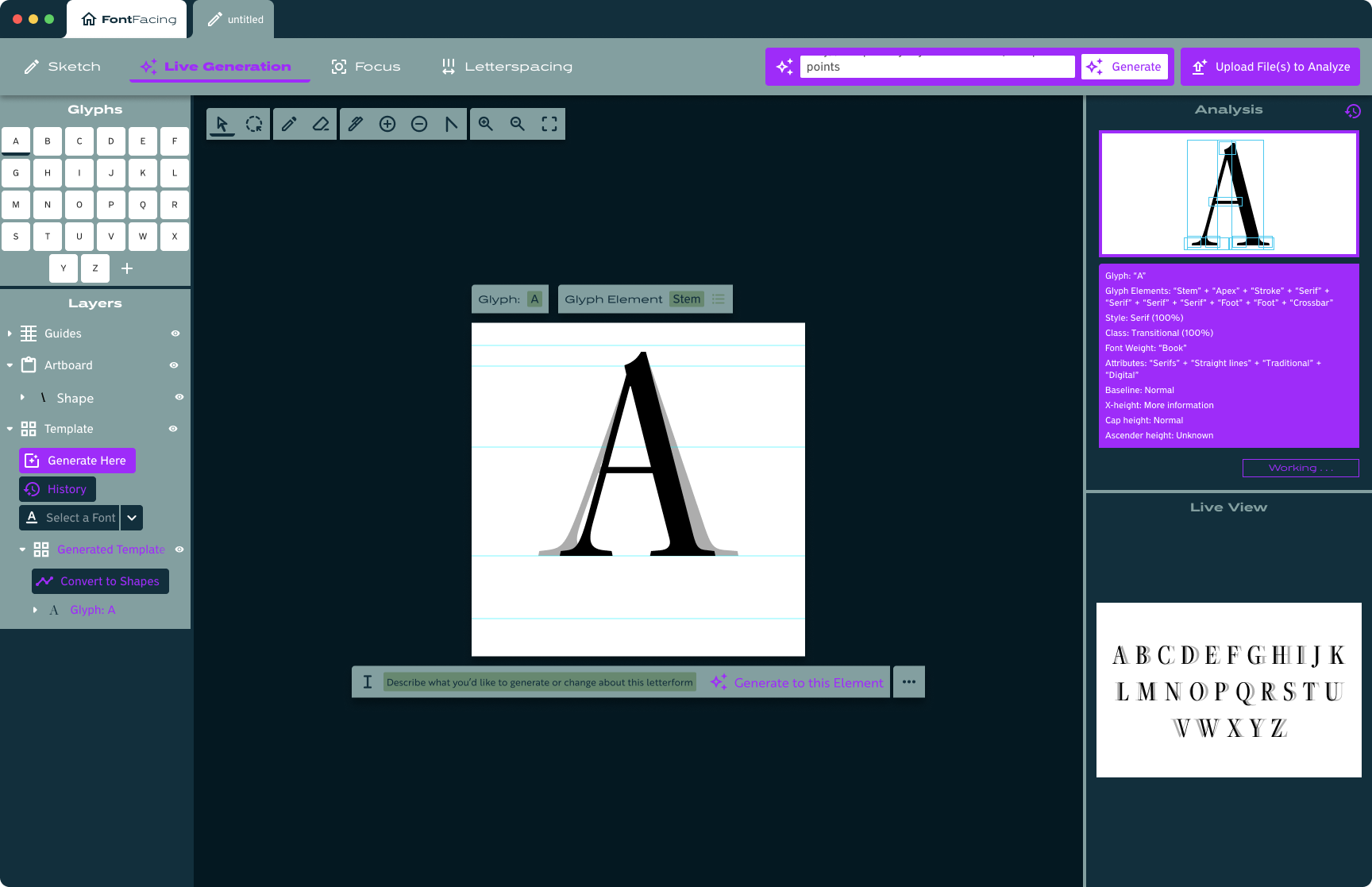

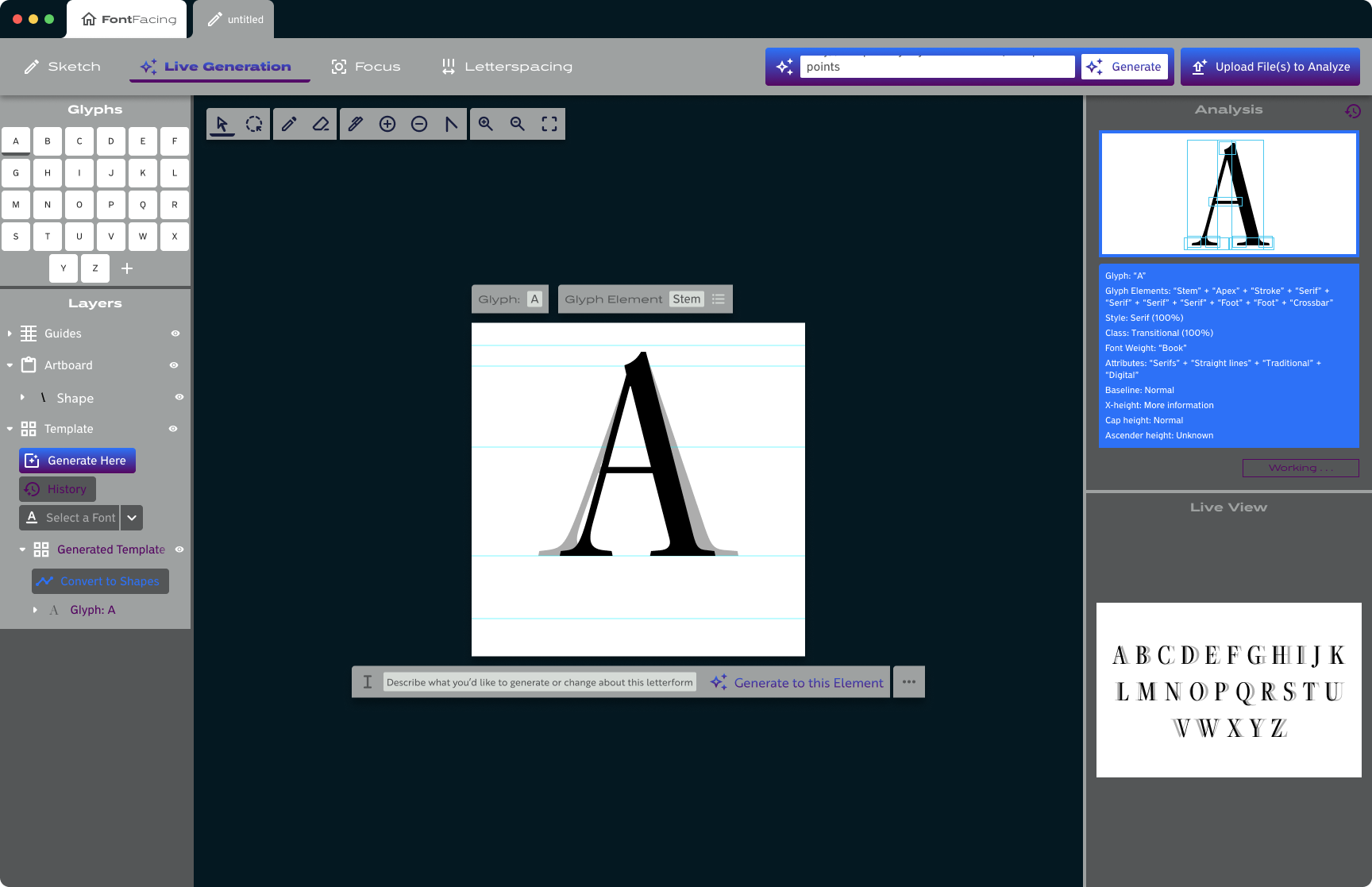

- Introduce a mode-based workflow with selections like Live Generation, Details, and more to toggle between AI-driven automation and hands-on manual refinement.

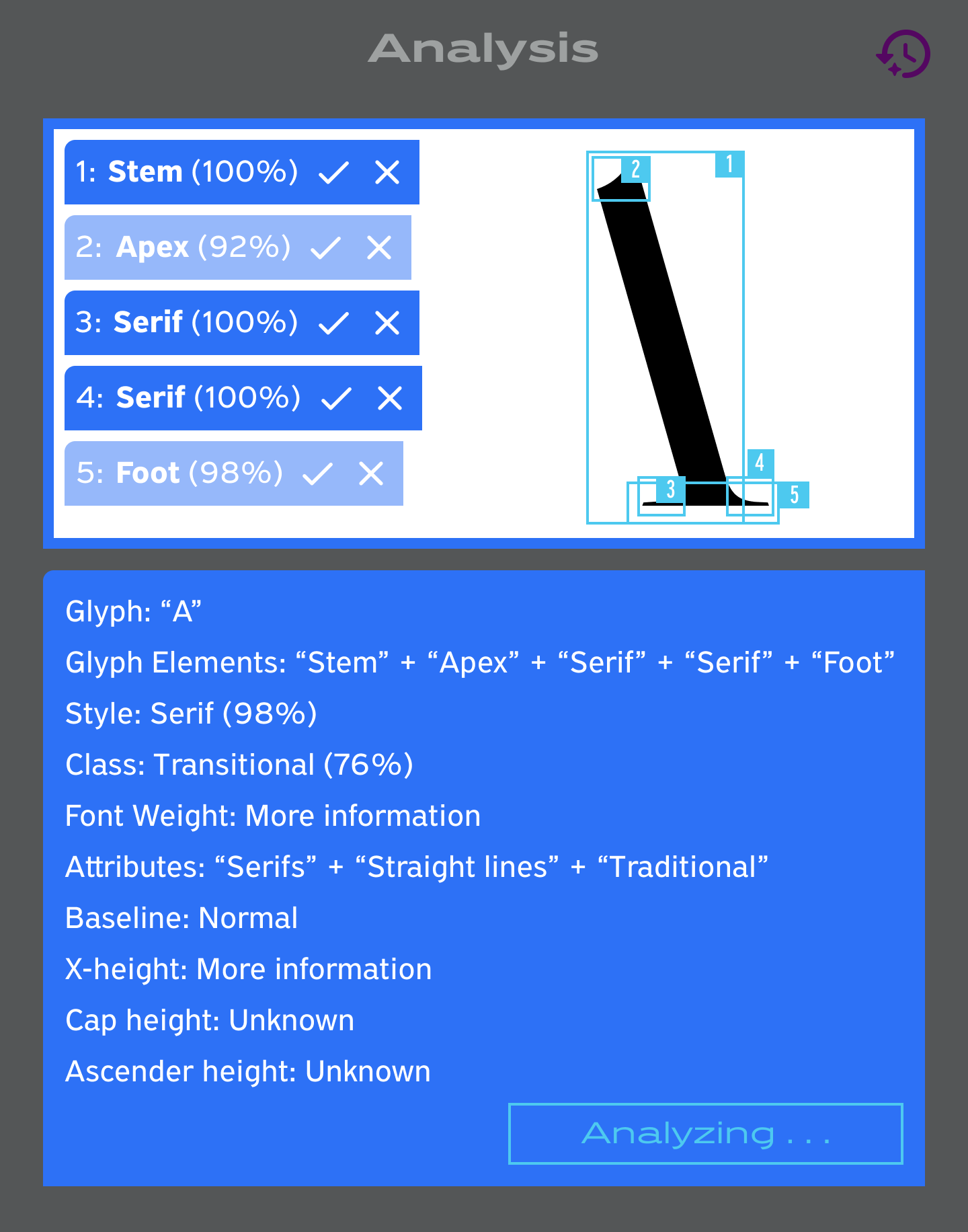

- Provide feedback within the interface so designers can see how the AI interprets their inputs, understand changes made by AI generation, and confirm or deny AI suggestions.

- Allow designers to input through flexible methods to guide the AI, ensuring the outcome aligns closely with their vision.

The problem of designing a typeface

Designing a typeface, and maintaining consistency, is a time-intensive task that often requires weeks or months of meticulous work. Each glyph must harmonize with the rest of the character set, making it difficult for designers to maintain visual consistency and experiment freely.

How can we help type designers create a typeface in less time without losing their creative vision?

1/6

Discovery

Experimenting with Existing AI Generation

I created several training models to build up some observations and experience with existing AI generation to help inform my concept.

Preliminary Attempt(s)

To get started, I created a few different training models using a variety of related photos.

Type-Specific

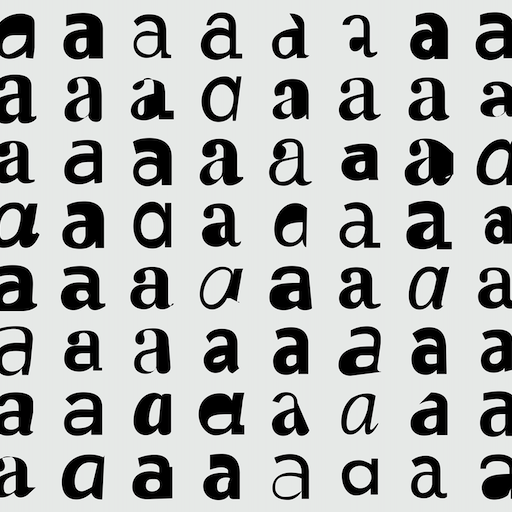

To test more basic shape creation, I made another model using jpgs of letters in an assortment of typefaces.

Current SVG Generation

As I narrowed down my concept to typography, I also narrowed it down to vector graphics, so I experimented with ChatGPT and Adobe's suite of beta tools for generating SVGs.

Existing Research in this Space

Since I’d been testing with basic letterforms, I searched for typeface generators but found none at the time of this project. There were a lot of copy/text generators, but none for typeface design. In my search, I read through the work of Erik Bern, Måns Grebäck, and Jean Böhm, all of whom had conducted research and experiments into the capabilities of AI to generate letterforms.

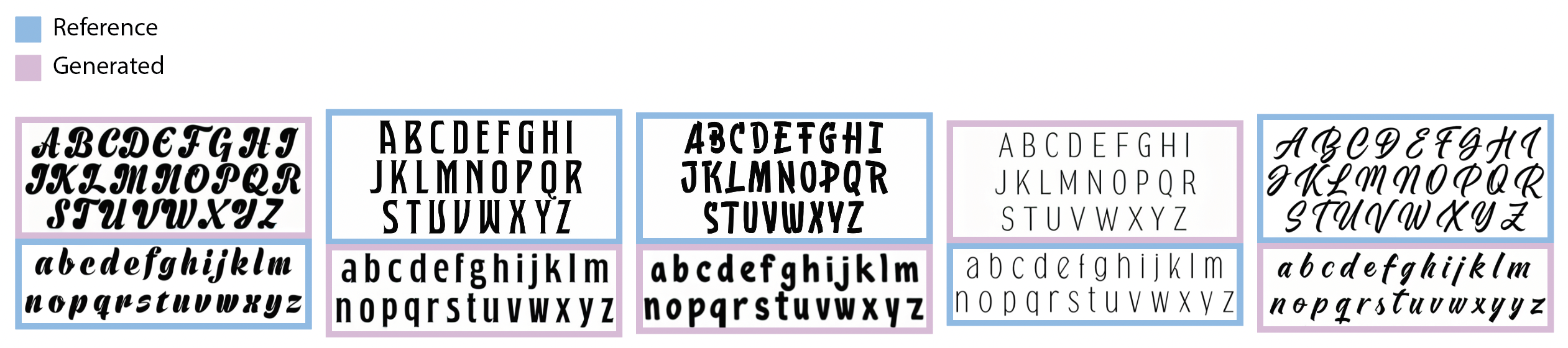

Left: experiments from Erik Bern to generate whole typefaces; Right: in-depth and extensive experiment from Jean Böhm to generate letterforms as actual vector graphics.

Above: Experiments from Måns Grebäck to see the abilities of AI to generate the lowercase of a typeface if given the uppercase, and vice versa.

Insights

AI systems often prioritize their capabilities over user interaction, which leads to basic input methods. Shifting the focus toward what users can achieve with AI can lead to more intuitive and impactful interactions.

Every word or pixel included in the training data influences the output. Outliers are a particular concern, because AI cannot identify them without specific training.

The “magic” of AI comes from not knowing how input is being analyzed and processed, which can be confusing for designers. Transparent and understandable AI interactions will help enhance trust and comprehension.

For best results, users must go beyond data selection and make use of ongoing supervised learning and reinforcement of the model (which, for some AI systems, can be impossible for the user). This approach not only refines pattern recognition but also ensures consistent performance.

2/6

Exploring Low Fidelity

Low Fidelity Exploration

These explorations ignore the research

I quickly realized that I wasn’t designing with my insights in mind. I scrapped these ideas and started over by mapping the current workflow of typeface design. This would allow me to consider where AI could support the current process.

3/6

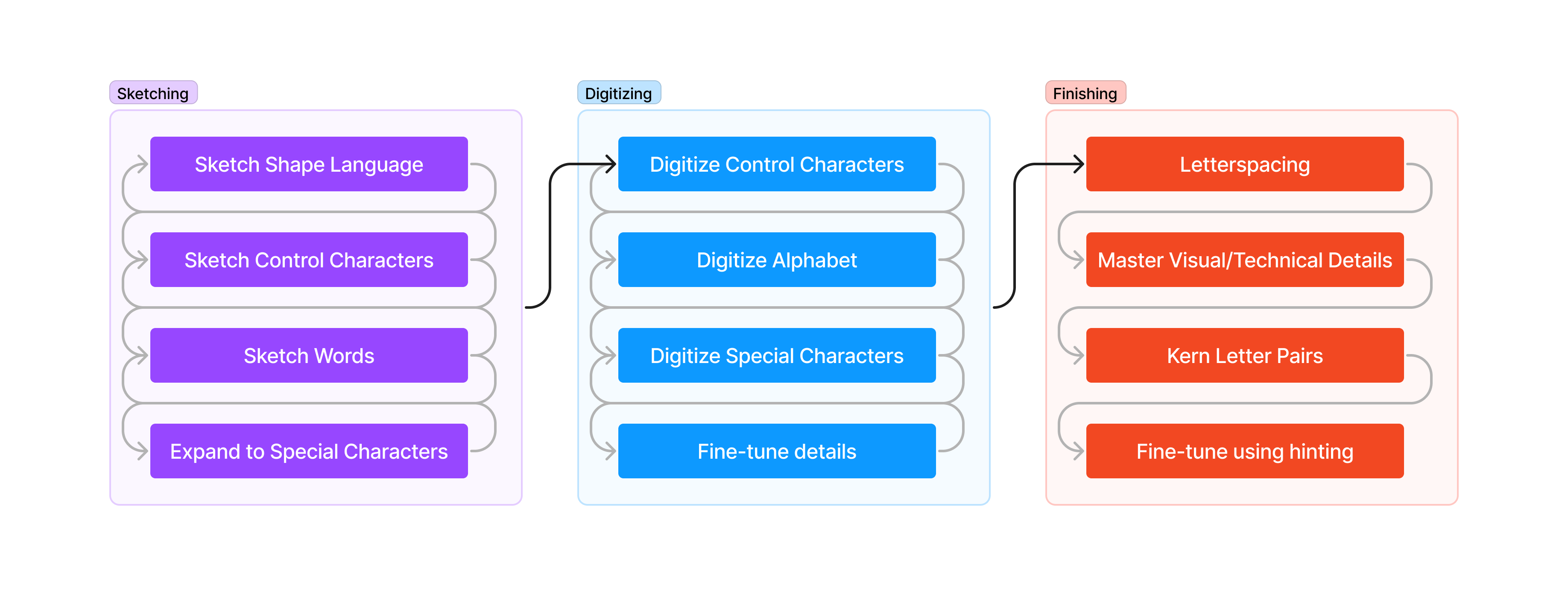

Mapping the Workflow

The Visual Design Aspects are not Linear

The sketching and digitizing stages of typeface design are extensive and may require multiple passes. Depending on the designer, the entire set of characters (lowercase, uppercase, numbers... 26-70ish characters at minimum or in the hundreds depending on the needs of the typeface) may need to be sketched multiple times.

Repeating Visual Design Elements

Many typeface designers will start the sketching phase by working on control characters, the design of which will help define other characters.

For instance, you can take the pieces of the lowercase ‘n’ to then work out the i, l, h, m, u, r, t, and you’d also have the x-height for other lowercase letters. After working on the lowercase ‘o’ you’d then have the building blocks for b, p, d, q, c, and e. You’d have to work on some of the finer details, but just from those two, the lowercase ‘n’ and ‘o’, you’d have a lot of the major work done for 15 characters, with the pieces necessary to work on many others.
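The count above can be checked with a small sketch. The mapping below is hypothetical and only encodes the letters named in the previous paragraph; a real control-character plan would vary by designer and typeface.

```python
# Hypothetical mapping from control characters to the glyphs they inform,
# taken directly from the letters listed in the paragraph above.
CONTROL_CHARACTERS = {
    "n": ["i", "l", "h", "m", "u", "r", "t"],
    "o": ["b", "p", "d", "q", "c", "e"],
}


def covered_glyphs(controls):
    """Return every glyph with a starting point: the controls plus what they inform."""
    covered = set()
    for control, derived in controls.items():
        covered.add(control)
        covered.update(derived)
    return covered


glyphs = covered_glyphs(CONTROL_CHARACTERS)
print(sorted(glyphs))
print(len(glyphs))  # 15
```

Two control characters give a head start on 15 of the 26 lowercase letters, which is the kind of repetition a pattern-recognition system could exploit.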

Typefaces in the Context of AI

A typeface is a visual pattern that repeats across all of its characters. The consistency with which that pattern is applied is what defines the visual style of a font (and the feelings it imparts to the reader).

A training model should be able to figure out the repeating visual cues from a relatively small sample size. Done correctly, an AI system should therefore be able to speed up the workflow of typeface design.

With the process in mind, what is a typeface?

A consistent collection of design elements that repeat and, as they combine, form the characters/letters that make up written language.

4/6

Back to Designing

Identifying the Concept

AI is fantastic at pattern recognition and reproduction, and typeface design is a back-and-forth dance of refining the consistency and visual design of elements that will invariably end up repeated throughout a typeface. From there it was an almost natural leap to the refined concept behind the application: the AI analyzes the pattern of your design while you design, and uses that pattern to build out and alter the rest of the character set.
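As a rough illustration of this concept, here is a minimal sketch of how one edit could become proposed changes for other glyphs. It is not how FontFacing would actually be built: a real system would learn patterns from a trained model, whereas this sketch assumes hand-named parameters such as `stem_width`. The `Glyph` class, the `locked` flag, and `propagate_change` are all hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Glyph:
    name: str
    # Hypothetical shared design parameters, e.g. {"stem_width": 80}.
    params: dict = field(default_factory=dict)
    # Assumption: the designer can opt a glyph out of AI changes.
    locked: bool = False


def propagate_change(edited, before, glyphs):
    """Turn the change made to one glyph into proposals for the others.

    Proposals are returned rather than applied, so the designer keeps control.
    """
    # Work out which parameters the designer actually changed, and by how much.
    deltas = {
        key: edited.params[key] - old
        for key, old in before.items()
        if key in edited.params and edited.params[key] != old
    }
    proposals = {}
    for glyph in glyphs:
        if glyph is edited or glyph.locked:
            continue
        # Only glyphs that share a changed parameter receive a proposal.
        changed = {k: glyph.params[k] + d for k, d in deltas.items() if k in glyph.params}
        if changed:
            proposals[glyph.name] = changed
    return proposals


n_before = {"stem_width": 80, "x_height": 500}
n = Glyph("n", {"stem_width": 90, "x_height": 500})  # designer thickened the stem
h = Glyph("h", {"stem_width": 80, "x_height": 500})
l = Glyph("l", {"stem_width": 80}, locked=True)
o = Glyph("o", {"bowl_width": 420})

proposals = propagate_change(n, n_before, [n, h, l, o])
print(proposals)  # {'h': {'stem_width': 90}}
```

Returning proposals instead of editing glyphs directly reflects the project goal of letting designers influence or override AI decisions.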

More Detailed Iteration

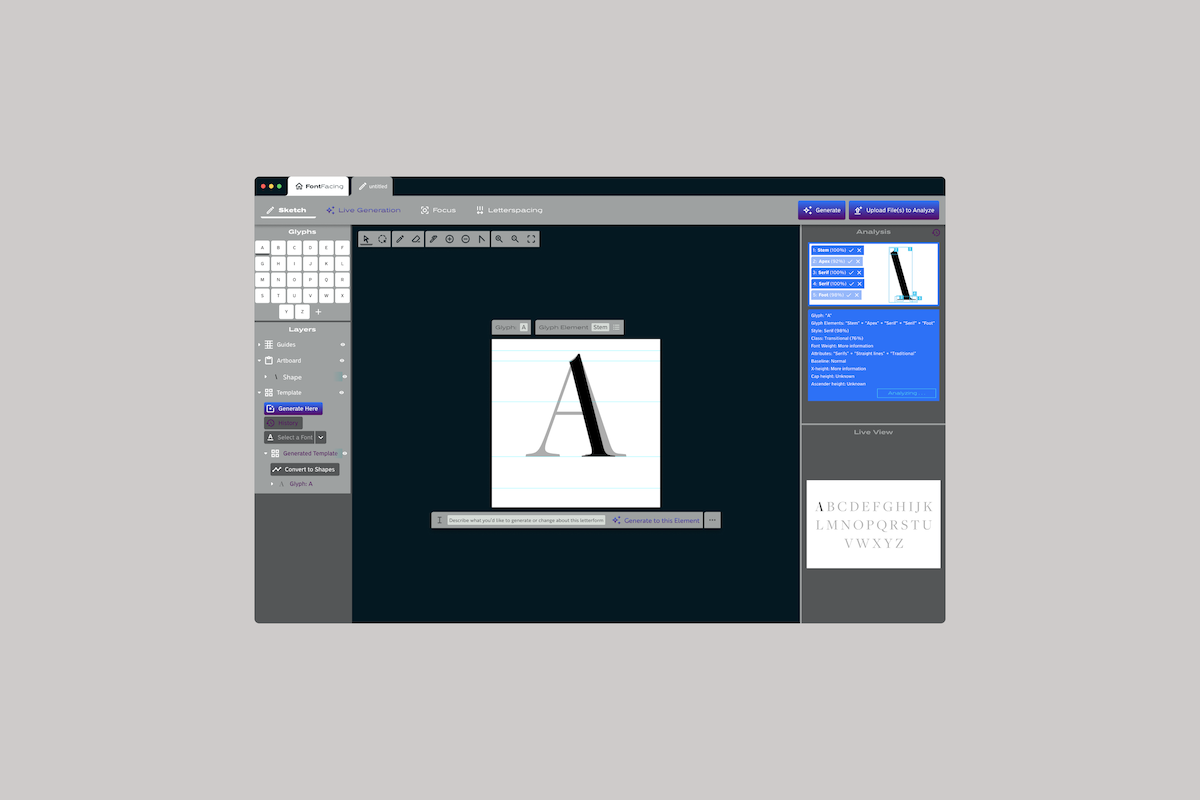

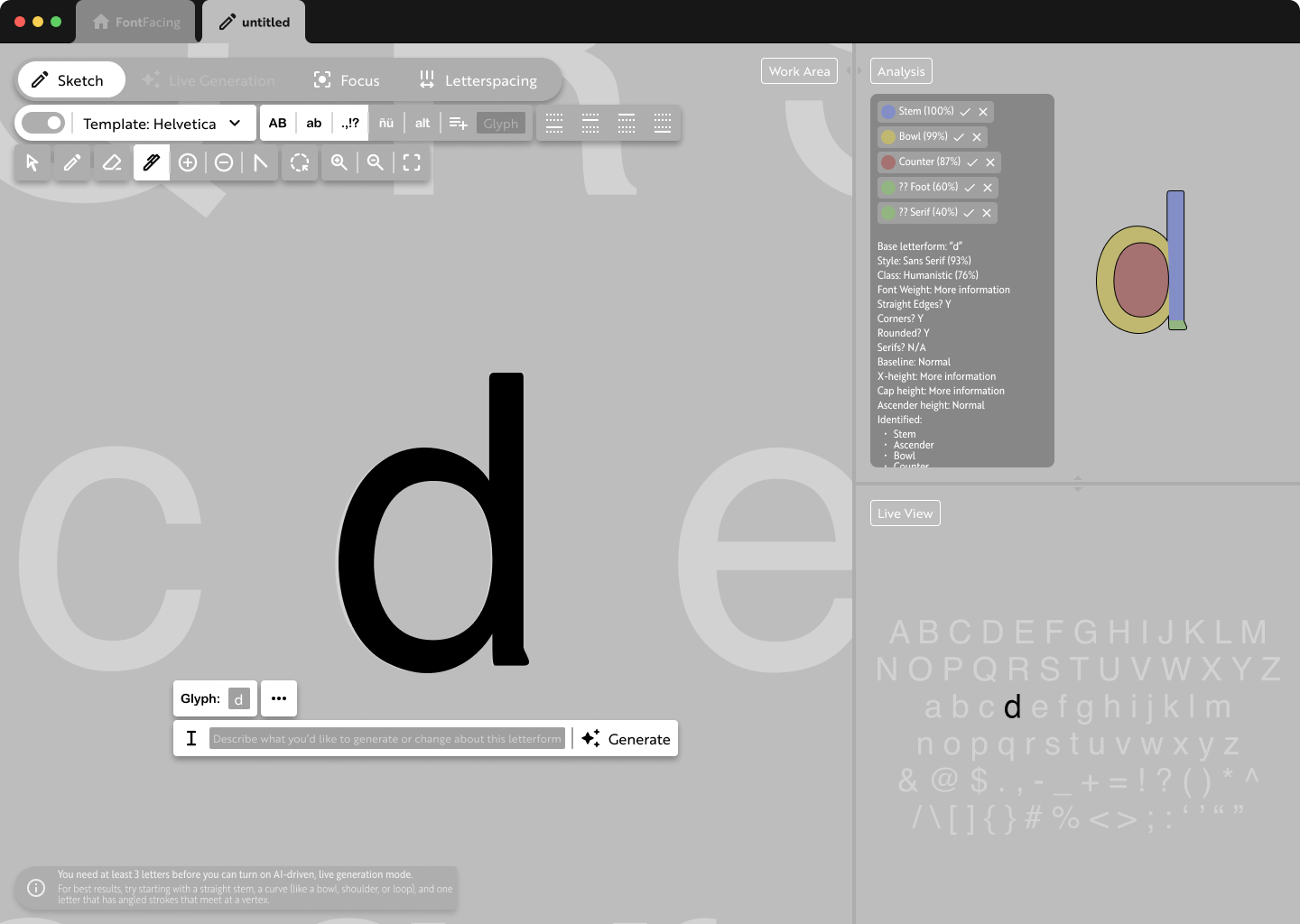

Moving towards medium fidelity, I introduced a sidebar to preview how the AI is interpreting data. It also gives designers manual input: they can allow or disallow the AI's interpretations of the data.
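One way to think about the sidebar's state is as a list of interpretations, each waiting for a decision. The sketch below is only an assumption about how that could be modeled; `Decision`, `Interpretation`, and `to_apply` are hypothetical names, not part of any existing tool.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PENDING = "pending"
    ALLOWED = "allowed"
    DISALLOWED = "disallowed"


@dataclass
class Interpretation:
    glyph: str
    description: str  # what the AI believes the designer intended
    decision: Decision = Decision.PENDING


def to_apply(interpretations):
    """Only interpretations the designer explicitly allowed are acted on."""
    return [item for item in interpretations if item.decision is Decision.ALLOWED]


sidebar = [
    Interpretation("n", "Stem width increased"),
    Interpretation("n", "Shoulder curve flattened"),
    Interpretation("o", "Bowl made narrower"),
]
sidebar[0].decision = Decision.ALLOWED
sidebar[1].decision = Decision.DISALLOWED

for item in to_apply(sidebar):
    print(f"{item.glyph}: {item.description}")  # n: Stem width increased
```

Keeping undecided items as `PENDING` rather than applying them by default means nothing changes without the designer's say-so.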

Existing Typeface Design Platforms

I looked at the interfaces of existing typeface design platforms, and design apps in general, to get a better sense of the tools designers are used to. Following familiar conventions would make my design easier to adopt.

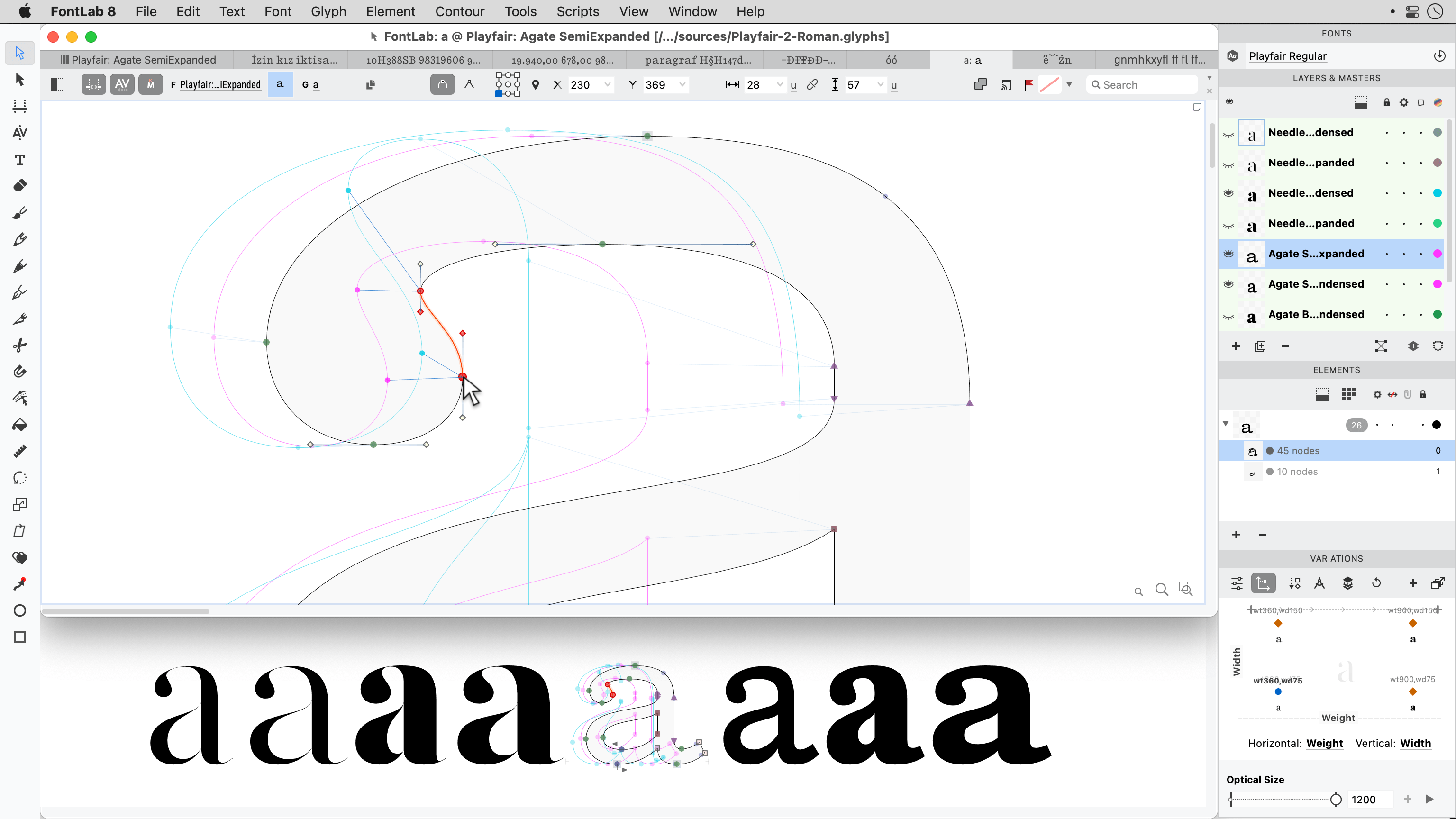

Making fine adjustments between font styles of a typeface using FontLab.

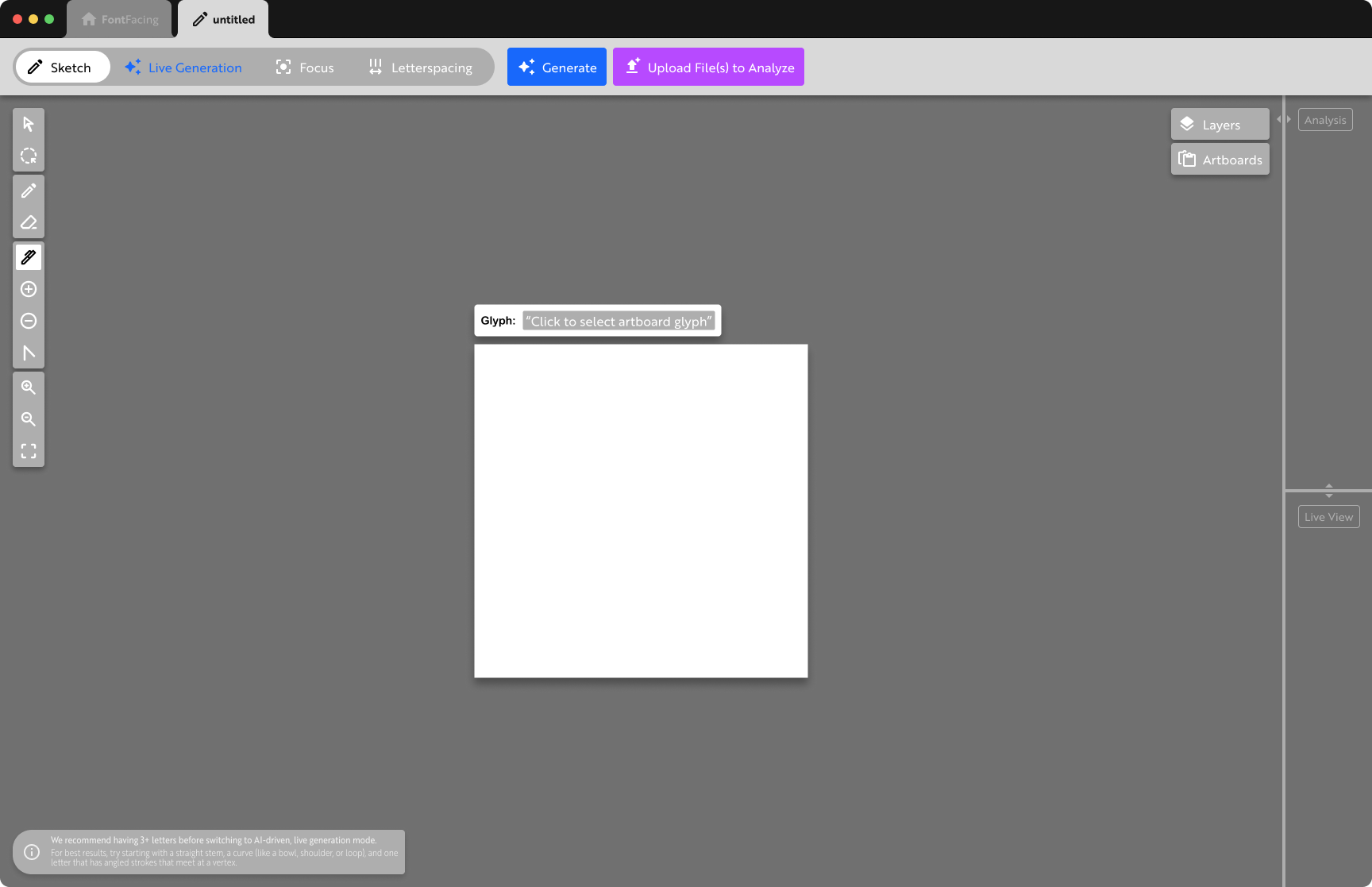

Moving from a Browser to the Desktop

At this stage it became necessary to iterate towards a desktop application instead of a web app, so designers could work offline.

This iteration also features the introduction of the “Stage” slider to tell the platform which stage of the process you’re in.

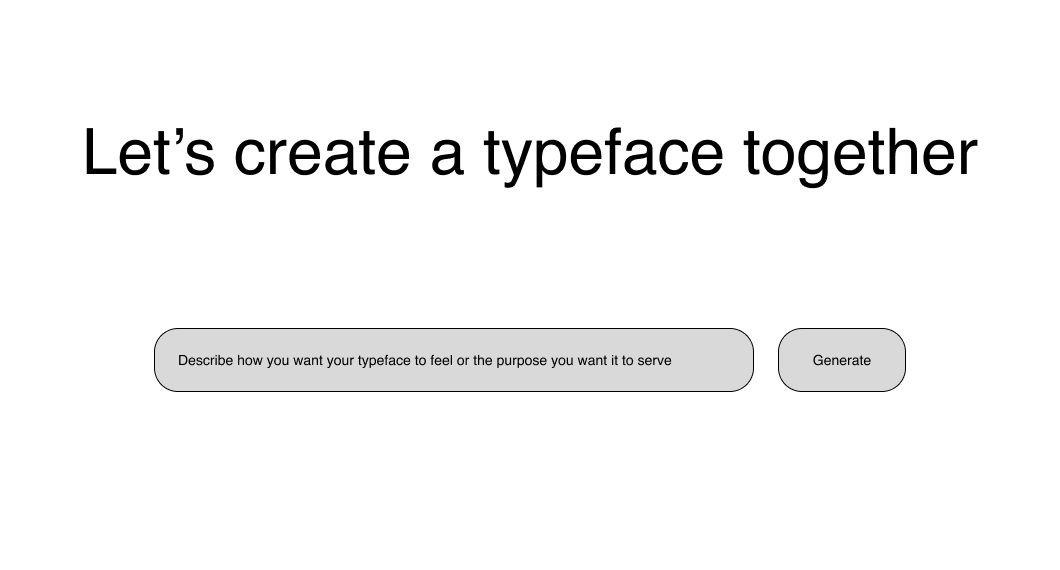

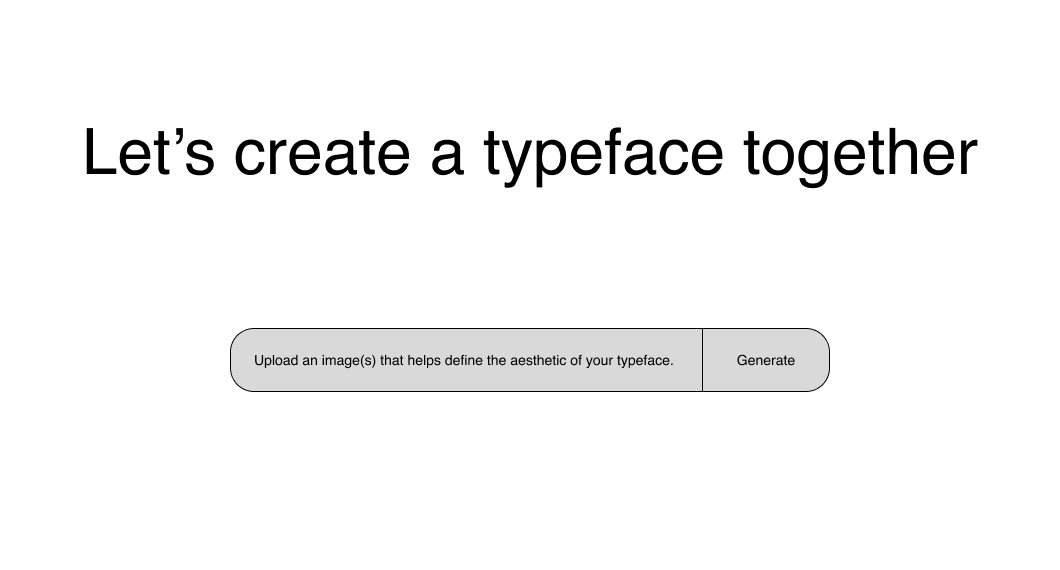

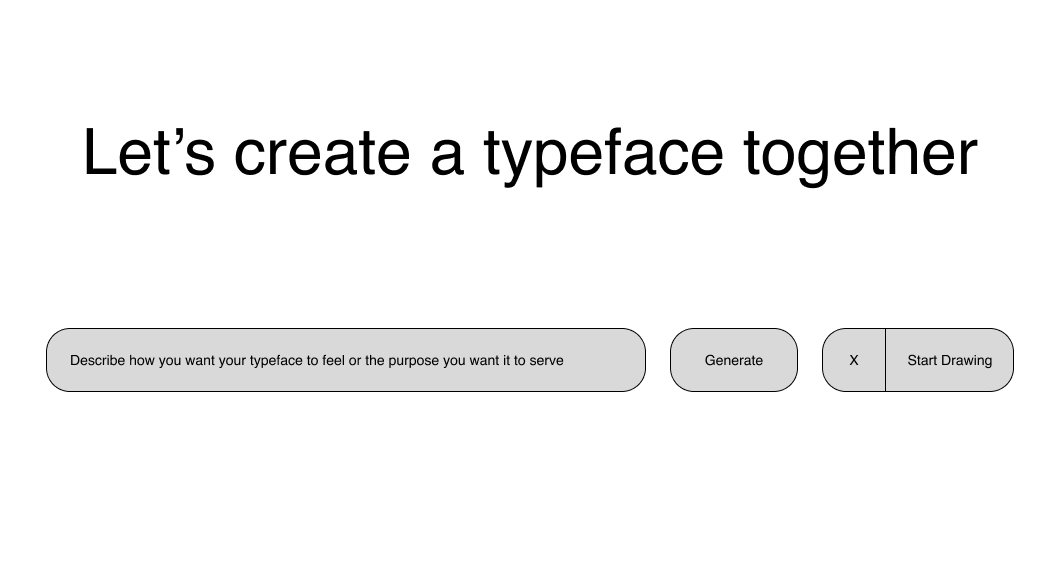

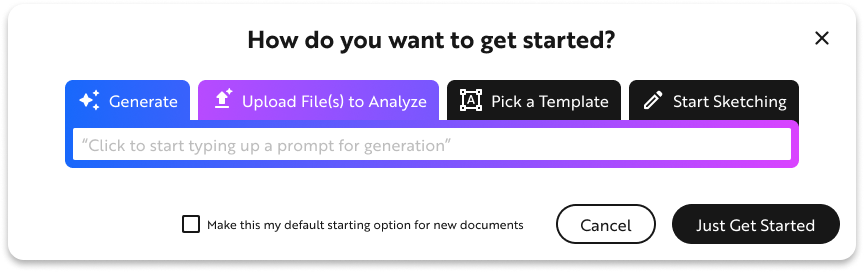

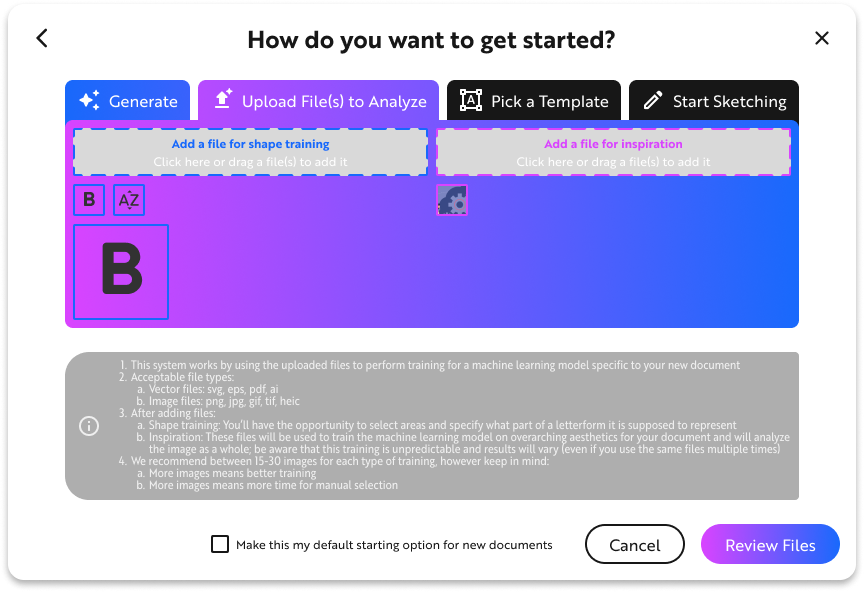

Starting Pop-Up

In an effort to highlight that there are AI features, I experimented with a pop-up modal that would appear whenever a new file is started. Unfortunately, this could lead designers to think they can only select one particular way to work.

Although the modal didn't stay, the use of color to indicate AI features became an important part of the design.

5/6

Prototyping

Rethinking the Workflow

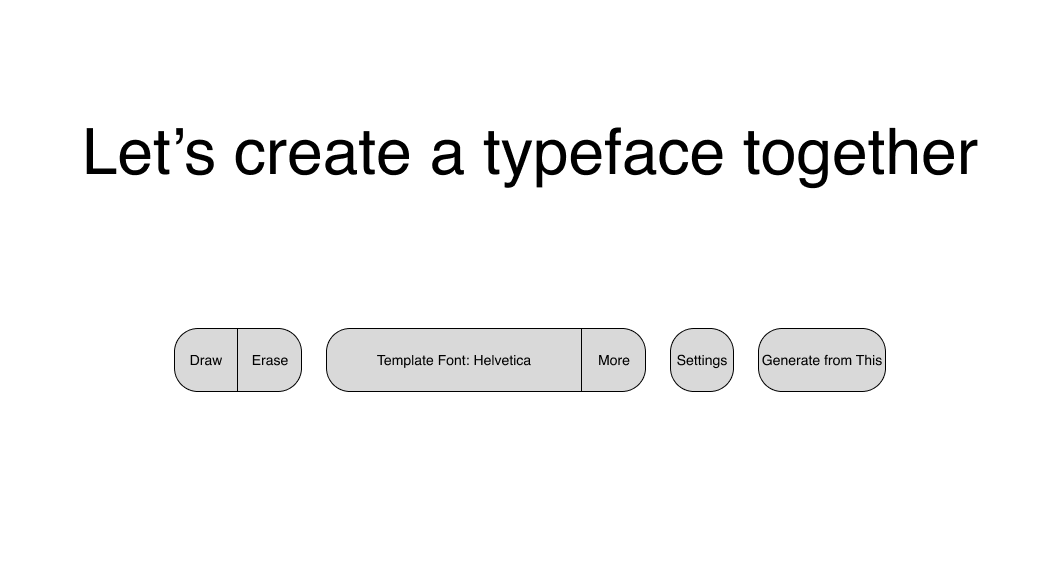

This platform should empower designers, however they want to work. Obstructing their design process with a pop-up goes against that. I mapped out a more circular workflow for the design process with AI seamlessly integrated into it.

This workflow assumes that the designer is going to be moving back and forth and, therefore, should have access to the AI features at all times, for whenever they might need them.

Exploration & Iteration

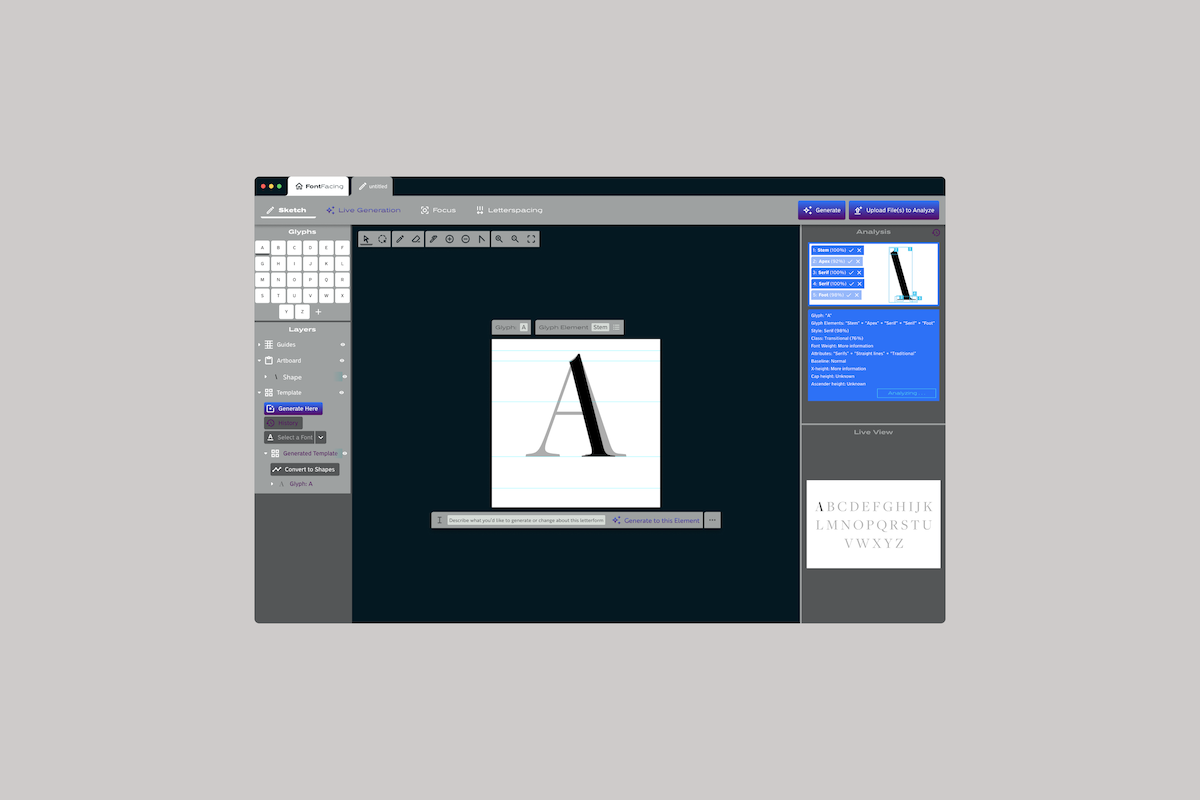

Finalized Layout

This exploration represents what would become the finalized layout of the interface. The previous buttons to access Layers and Character Set have become a separate sidebar on the left of the interface. I tried to depart from the primarily gray color palettes of most design platforms, but it’s overbearing here. There's too much color.

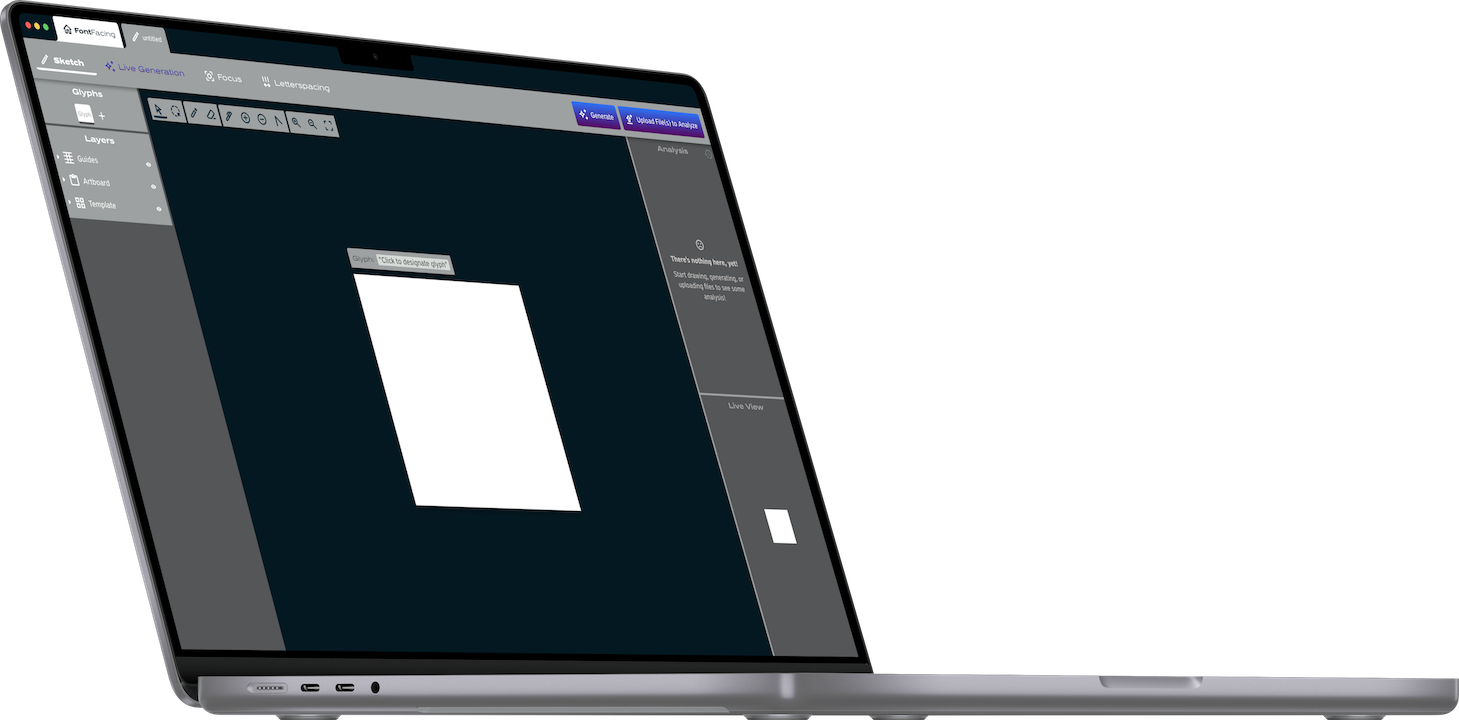

Finalized Interface

I pared back the use of color from the previous version and explored more ways to use the blue and purple to highlight the AI features.

6/6

Project Validation

After I finished this project and its AI-related features, some interesting updates came to ChatGPT. They helped validate that, at least in part, I had explored aspects of AI features that the industry agrees are important.

ChatGPT-4

This is how ChatGPT worked at the time I conceived of FontFacing. You entered a prompt, saw a small dot that amounted to a "Loading" animation, and then got your generated response.

Insights for FontFacing

This is a concept I'd come up with for FontFacing, from an insight about the lack of feedback and transparency in existing AI tools which often make AI seem like magical interactions. That lack of feedback and transparency makes it difficult to refine your prompts/interactions because there's no way to know how the previous interaction was analyzed.

ChatGPT-o1 Preview

In September 2024, OpenAI released a preview of its newest model, o1, in ChatGPT. After entering a prompt, there's a flash of text-based feedback as if to say, "This is what you asked for, let me think about it."

Final Thoughts & Next Steps

I firmly believe FontFacing and similarly designed tools could successfully empower designers. There would be some difficulty surrounding the base training sets, since the platform would need extensive training on typefaces to understand the anatomy and character sets. Typefaces are intellectual property and their use for AI training could be contentious.

I would love to continue exploring this concept. The opportunities to explore micro-interactions are endless. The current design is also, roughly, the bare minimum the application would require to be considered a serious design application. Professional typeface design has a plethora of functions that aren’t represented here and would need to be worked into the interface; not everything can be folded into a menu.